Oculus reveals DeepFocus, an open source AI renderer for varifocal VR

VentureBeat | December 19, 2018

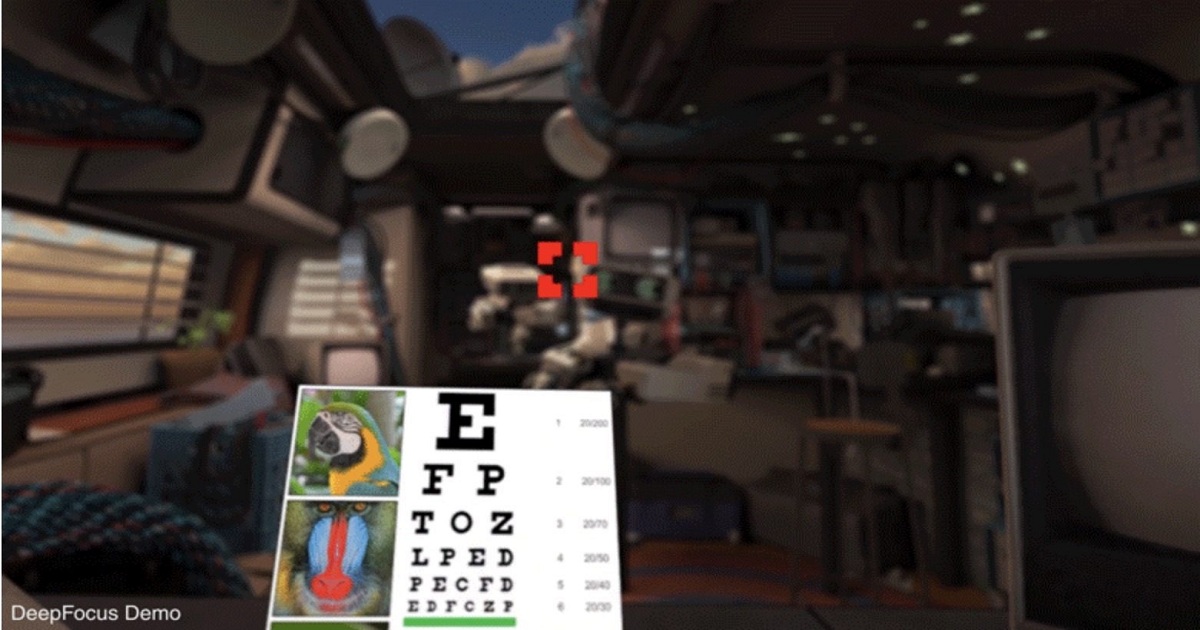

When Facebook’s Oculus unit revealed a next-generation prototype VR headset called Half Dome in May, one of its signature features was a “varifocal” display system that actually moved the screens inside to mimic focus changes in the user’s eyes. Now the company has revealed DeepFocus, the software rendering system used to power the varifocus feature, along with an interesting announcement: DeepFocus’ code and data are being open-sourced so researchers can learn how it works, and possibly improve it.

While the details of DeepFocus are technical enough to be of interest only to developers, the upshot is that Oculus’ software uses AI to create a “defocus effect,” making one part of an image appear much sharper than the rest. The company says the effect is necessary to enable all-day immersion in VR, as selective focusing reduces unnecessary eye strain and visual fatigue.

Oculus says that DeepFocus is the first software capable of generating the effect in real time, providing a realistic, gaze-tracking blurring of everything the user isn’t currently focusing on. The key word there is “realistic,” as prior blurring systems for games were designed for flashiness or cinematographic effects, not accuracy, and Half Dome’s mission “is to faithfully reproduce the way light falls on the human retina.”